Freedom Hosting 2: Overview

Freedom Hosting 2 Series

I’m a little late to the party on this post, but Freedom Hosting 2—a notorious shared hosting service on the dark web—was anonymously hacked in 2017. The hacker posted a dump of the databases and some of the configuration and code from the server. In this post, I’ll crack into the dump and show you a bit of my analytic process to make sense of this large mass of data.

Background

Freedom Hosting 2 was compromised by an anonymous hacker. Wired magazine picked up the story on February 6th, 2017, but the files indicate that the site was defaced on February 3rd. I’m not sure how long the hacker had access before making his/her presence known.

The hacker defaced the website and held the files for ransom at first. Later, he/she released a SQL database dump and a largely redacted tarball of the server’s filesystem. The hacker removed all of the customers' home directories because the hacker claimed there was an excessive amount of child exploitation material on Freedom Hosting. This is a claim I will evaluate as I dig into the data, but as a quick spoiler alert: yes, FH2 was serving massive amounts of child exploitation content.

Prior to the hack, Sarah Jamie Lewis had estimated that Freedom Hosting 2 was hosting 20% of all dark web sites. Soon after the hack, a few people published cursory analyses, but I have yet to see a real deep dive into this dataset. This post is a step in that direction. I have a few ideas for follow-up posts, so let us know on Twitter if you like this topic and want to see more.

A few disclaimers:

- Freedom Hosting 2 did host a lot of horrible content, including child exploitation, human trafficking, nazism, and more. I will include warnings before discussing any of that content in greater detail, but be aware that it will be discussed.

- I ordinarily do not condone the analysis and dissemination of stolen data. Just because something is stolen and published does not automatically make it fair game for all of us in the public to pry into. In this case, I am making an exception because Freedom Hosting 2 clearly served a lot of illicit and illegal content, and so this is a rare glimpse deep into the innards of the worst parts of the dark web. I will however avoid publishing any personally identifying details.

- When I built the Dark Web Map, I masked off onions so as not to inadvertently create an onion directory that would point people at dangerous or illegal dark web sites. In my FH2 analysis, I will not mask onion names because all of these sites are now defunct.

With that out of the way…let’s get started!

Staging the data

I am focusing almost exclusively on the 2.2GB SQL database dump. Therefore, my first task is to load the SQL into a staging database. I don’t want to install MySQL, and I definitely don’t want to run an untrusted SQL script on my computer, so I decided to load this data inside a Docker container. This is a relatively safe approach to isolate the data as long as I use an unprivileged container and don’t mount sensitive directories inside the container.

I can see at the top of the SQL file that it was dumped with MariaDB 10.1.21, so I spun up a container with a version close to that:

$ docker run --name fh2 -v /mnt/freedom-hosting/:/data -e MYSQL_ALLOW_EMPTY_PASSWORD=1 mariadb:10.2.22-bionic

This starts up an empty MariaDB database with no root password. It is only accessible from my localhost, so nobody on my network can access it. And I have mounted a directory containing the SQL script into the container. Now I can access the container and load the SQL data.

$ docker exec -it fh2 /bin/bash

root@889446a892e7:/# mysql < /data/fhosting.sql

Staging this data takes a couple of hours, so I skimmed through the FH2 filesystem while it was loading. The filesystem contains system password hashes, but they are $6$ crypt hashes (salted SHA-512, 5,000 rounds by default), which are very slow to crack. Beyond that, I did not find anything else worth reporting. (Keep in mind that the anonymous hacker removed all of the customers' files due to concerns about illegal content.)

Getting oriented

When the data finished loading, my next step was to try to figure out at a high level what kinds of databases are in the dump. There are 10,992 separate databases in this dump: one for each customer, plus a few extra for metadata and management. This is far too many databases to go through them by hand, so I started by checking to see if any databases were empty, i.e. containing zero tables. It turns out that the vast majority of them are empty! Only 797 databases contain any tables. I assume that FH2 created a database for each site, even if that site did not need a database. This suggests that the majority of sites on FH2 were static sites, i.e. not data-driven.
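One way to run this kind of emptiness check is to ask MariaDB's built-in information_schema which schemas own at least one table, then compare that against the full database list. The following is a minimal sketch rather than the exact method used for this post: the query relies on the standard information_schema.TABLES view, while the helper name `split_empty` and the sample rows are hypothetical and not taken from the dump.

```python
# Standard MariaDB/MySQL query: one row per schema that owns at least one table.
NONEMPTY_SQL = """
SELECT TABLE_SCHEMA, COUNT(*) AS table_count
FROM information_schema.TABLES
GROUP BY TABLE_SCHEMA
"""


def split_empty(all_schemas, table_counts):
    """Partition schema names into (empty, non_empty).

    all_schemas  -- names as returned by SHOW DATABASES
    table_counts -- (schema, table_count) rows as returned by NONEMPTY_SQL
    """
    counts = dict(table_counts)
    empty = sorted(s for s in all_schemas if counts.get(s, 0) == 0)
    non_empty = sorted(s for s in all_schemas if counts.get(s, 0) > 0)
    return empty, non_empty


# Hypothetical sample data, not taken from the dump.
schemas = ["aaaaaaaaaaaaaaaa", "bbbbbbbbbbbbbbbb", "_fhosting"]
rows = [("_fhosting", 6), ("bbbbbbbbbbbbbbbb", 12)]
empty, non_empty = split_empty(schemas, rows)
print(len(empty), "empty;", len(non_empty), "with tables")
```

Schemas that never appear in the query result have no tables at all, which is why the helper treats a missing count as zero.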

Of the 797 databases that remain, almost all of them were named the same as an onion address, e.g. onagsztj5apd62lh. Only 4 databases deviated from this pattern:

- _fhosting
- information_schema
- mysql
- performance_schema

The last three are default databases built into MariaDB, so I ignored those. But the _fhosting database is tantalizing! This appears to be the database that stores the customer data and administrative data for FH2.
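Separating onion-named databases from the outliers can be automated with a pattern match. This sketch assumes the names are v2 onion addresses, which are 16 base32 characters (a-z and 2-7), consistent with the example name quoted above. The function name `is_onion_name` is hypothetical.

```python
import re

# v2 onion names are 16 base32 characters (a-z and 2-7).
ONION_V2 = re.compile(r"[a-z2-7]{16}")


def is_onion_name(db_name):
    """Return True if the whole database name looks like a v2 onion address."""
    return ONION_V2.fullmatch(db_name) is not None


names = [
    "onagsztj5apd62lh",
    "_fhosting",
    "information_schema",
    "mysql",
    "performance_schema",
]
outliers = [n for n in names if not is_onion_name(n)]
print(outliers)
```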

MariaDB [_fhosting]> show tables;
+---------------------+
| Tables_in__fhosting |
+---------------------+
| crontab             |
| csrf                |
| domains             |
| ftp                 |
| pwchange            |
| sql                 |
+---------------------+
6 rows in set (0.00 sec)

The domains table lists all of the onions hosted on FH2, including sites that were “deleted” and therefore not included in the 10,992 sites mentioned above. This table also contains an RSA private key for each site as well as a username and a password. (The passwords are hashed using an unusual algorithm. A future post will dig deeper into the passwords.) The other tables are less interesting.

An overview of FH2

At this point, I have narrowed down the starting data set to 793 remaining databases, each of which contains data for a single onion site. This is still too many databases to review by hand. There is a significant irony here: SQL databases are the epitome of “structured data”—it’s right there in the name!—but when you have hundreds of databases with different schemas, it starts to feel very unstructured. I wanted to build up an overview of these databases that might give me some leads for other lines of analysis.

I hypothesized that many of these sites would probably be running some open source software, like phpBB, which would produce consistent patterns of database tables. If I could find common table names across many different databases, then I could determine the prevalence of different types of software on FH2.

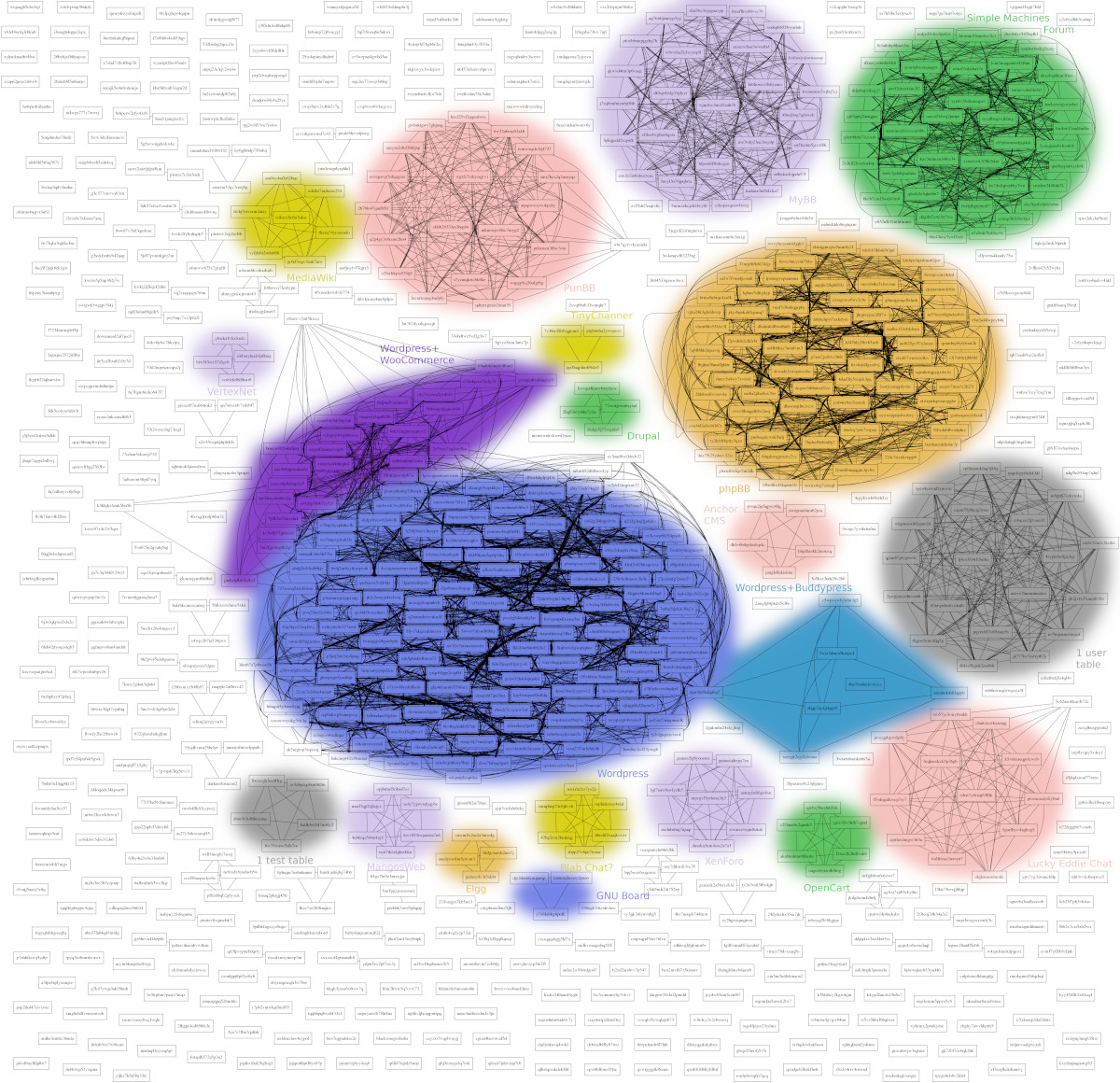

The solution to this problem is superficially similar to the method that I used in the Dark Web Map: I computed similarity scores between each pair of databases and then used a spring layout algorithm to visualize clusters of related databases.

The clustering step is a bit tricky. A lot of popular open source web software allows you to specify a “prefix” for the tables that it generates. For example, phpBB creates tables called phpbb_posts and phpbb_users by default, but you could change the prefix to something like foo and it would call the tables foo_posts and foo_users instead. Therefore, I normalized all the table names by removing anything up to and including the first underscore, so that all phpBB tables would end up as just posts and users.
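The normalization rule described here fits in a few lines. This is a sketch of the rule as stated, not the original code; the function name `normalize_table_name` is hypothetical, and keeping names without an underscore unchanged is an assumption.

```python
def normalize_table_name(name):
    """Drop everything up to and including the first underscore."""
    _prefix, sep, rest = name.partition("_")
    # Names with no underscore (or nothing after it) are kept unchanged.
    return rest if sep and rest else name


for table in ["phpbb_posts", "foo_users", "wp_woocommerce_order_items", "test"]:
    print(table, "->", normalize_table_name(table))
```

One consequence of such a simple rule is that an unprefixed name like user_groups also loses its first word. That is harmless for clustering as long as the same rule is applied to every database.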

Then I computed the degree of overlap between the table names in each pair of databases. For example, if database 1 has tables named A and B, and database 2 has tables named B and C, then we take the number of tables in common (B) and divide by the total number of tables (A, B, and C) to get ~0.33. Statisticians call this Jaccard similarity, and it results in a score between 0.0 and 1.0 for each pair of databases. I created a graph representation by connecting all pairs of sites where score≥0.5, then used GraphViz to produce the rendering that you see above.
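The scoring and graph-building steps can be sketched as follows. The function names, the sample databases, and the plain DOT output are assumptions for illustration; only the Jaccard formula and the 0.5 threshold come from the description above.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection divided by the size of the union."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_edges(tables_by_db, threshold=0.5):
    """Return (db1, db2, score) for every pair scoring at or above threshold."""
    edges = []
    for (db1, t1), (db2, t2) in combinations(sorted(tables_by_db.items()), 2):
        score = jaccard(t1, t2)
        if score >= threshold:
            edges.append((db1, db2, score))
    return edges


def to_dot(edges):
    """Render the edge list as an undirected graph in Graphviz DOT syntax."""
    lines = ["graph fh2 {"]
    lines += [f'  "{a}" -- "{b}";' for a, b, _score in edges]
    lines.append("}")
    return "\n".join(lines)


# Hypothetical databases with already-normalized table names.
tables_by_db = {
    "db1": {"A", "B"},
    "db2": {"B", "C"},
    "db3": {"B", "C", "D"},
}
edges = similarity_edges(tables_by_db)
print(to_dot(edges))
```

Here db1 and db2 score 1/3 and stay unconnected, while db2 and db3 share two of three distinct tables (about 0.67) and get an edge. The DOT text can then be handed to one of Graphviz's layout engines.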

The rendering shows about 5-10 large clusters and about 25-30 clusters total. This clustering step whittled down the problem for me to something that I could start to review by hand, so my next step was to start looking at two or three databases in each cluster and try to figure out what software created the tables in each of those databases. I began labeling the clusters by hand and color coding each in order to visualize the contents clearly.
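Picking a few databases per cluster can also be approximated in code by treating each connected component of the similarity graph as one cluster. This is an assumption: the clusters in the rendering come from the visual layout, and a connected component may merge blobs that look separate on screen. The function names and sample data are hypothetical.

```python
def connected_components(nodes, edges):
    """Group nodes into connected components using union-find."""
    parent = {n: n for n in nodes}

    def find(n):
        while parent[n] != n:
            parent[n] = parent[parent[n]]  # path halving
            n = parent[n]
        return n

    for a, b in edges:
        parent[find(a)] = find(b)

    groups = {}
    for n in nodes:
        groups.setdefault(find(n), []).append(n)
    # Largest components first.
    return sorted(groups.values(), key=len, reverse=True)


def sample_per_cluster(components, k=3):
    """Pick up to k databases from each component for manual review."""
    return [sorted(c)[:k] for c in components]


nodes = ["a", "b", "c", "d", "e", "f"]
edges = [("a", "b"), ("b", "c"), ("d", "e")]
components = connected_components(nodes, edges)
print(sample_per_cluster(components, k=2))
```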


This blue cluster in the middle shows that WordPress was the most popular software on FH2. Note that WordPress has additional plugins that may be installed, and those plugins may create additional tables, which modifies the similarity score between databases. This is why the blue WordPress blob has a purple lobe on one side: these are WordPress sites with the WooCommerce plugin installed, which essentially turns a WordPress blog into a full-blown e-commerce store. There are also a few sites running the BuddyPress plugin (colored in cyan) that turns the site into a rudimentary social network.

The other labeled areas show highly prevalent forum software such as PunBB, MyBB, Simple Machines Forum, and phpBB. There is one large cluster of chat sites running “Lucky Eddie Chat” software.

There are two gray clusters which I labelled as uninteresting. One of the gray clusters consists of sites with only a single table named “test”, and the other cluster consists of sites with a single table named “user”. I guess those sites were built by people who were learning how to develop dynamic websites and didn’t get very far.

Digging into WordPress

This overview of FH2 points me in several different directions where I could continue my inquiry. I am in the process of digging into each section a bit more deeply, with a particular interest in the interactive sites like forums, chat rooms, and marketplaces. I’ll finish this post with a brief excursion into WordPress, since this tends to be more of a publishing platform and less of an interactive platform.

Since I have a cluster of sites that are all running WordPress, I can now run a series of queries on each WordPress database to collect things like site name, number of users, number of posts, etc. Here are the names of all the sites with 20 or more posts.
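The per-site queries might look like the sketch below. It relies on the standard WordPress schema: the blogname row in the options table, the users table, and published rows of type post in the posts table. Everything else is an assumption for illustration: the function name `wordpress_stats`, the choice to count only published posts, and the in-memory SQLite database that stands in for a MariaDB connection so that the example is self-contained.

```python
import re
import sqlite3


def wordpress_stats(conn, prefix="wp_"):
    """Return site name, user count, and published post count for one site."""
    # The prefix is spliced into SQL, so only allow identifier characters.
    if not re.fullmatch(r"[A-Za-z0-9_]*", prefix):
        raise ValueError("unexpected table prefix")
    cur = conn.cursor()
    cur.execute(
        f"SELECT option_value FROM {prefix}options WHERE option_name = 'blogname'"
    )
    row = cur.fetchone()
    cur.execute(f"SELECT COUNT(*) FROM {prefix}users")
    users = cur.fetchone()[0]
    cur.execute(
        f"SELECT COUNT(*) FROM {prefix}posts "
        "WHERE post_type = 'post' AND post_status = 'publish'"
    )
    posts = cur.fetchone()[0]
    return {"name": row[0] if row else None, "users": users, "posts": posts}


# Hypothetical stand-in data; a real run would connect to the staging database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE wp_options (option_name TEXT, option_value TEXT);
CREATE TABLE wp_users (ID INTEGER);
CREATE TABLE wp_posts (ID INTEGER, post_type TEXT, post_status TEXT);
INSERT INTO wp_options VALUES ('blogname', 'Example Blog');
INSERT INTO wp_users VALUES (1), (2);
INSERT INTO wp_posts VALUES
    (1, 'post', 'publish'), (2, 'post', 'draft'), (3, 'page', 'publish');
""")
stats = wordpress_stats(conn)
print(stats)
```

Because WordPress lets each site choose its own table prefix, the prefix is a parameter here and would need to be detected per database.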

This listing shows several prominent topics on the FH2 WordPress blogs: drugs, counterfeit items, bitcoin, and child exploitation. The site that has by far the most users is “Ranking de Sites Brasileiros Confiáveis”, which Google translates to “Ranking of Trusted Brazilian Sites by Users”. I have no idea what this site is for. ¯\_(ツ)_/¯

I also know from the visualization above that a significant number of sites have the WooCommerce plugin installed, so I can also grab names of products available across all of these marketplaces. Here is an arbitrary sample of those products:

- 100 x Alex Grey’s Hoffmann LSD Acid (100 x 100-125ug)

- 100×50 EURO FAUX BILLETS HAUTE QUALITÉ

- 20x LSD 110ug Buddha California Sunshine Frete Grátis

- 72% pure Speed 10g

- Alpha Relax

- Bolivian Cocaine 10 grams

- Cartela Homer Simpsons 25i 25x NBOMe 1200ug Frete Grátis

- Colombian Cocaine 500 grams

- DirecTV Online Streaming Account

- Doručení zdarma

- Minecraft Account

- Netflix Premium Account

- Paypal Balance > 800 USD

- Skrill Account

- Spotify Premium Account

- VISA physical

- WWE Network [LIFETIME + FREEBIES]

Again, we can see lots of drug items for sale here. There are also a surprising number of stolen accounts available. I can understand the black market value of a stolen PayPal account with an $800 balance, but I am a bit surprised that they are selling Netflix and Minecraft accounts!
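Product names like the ones listed above can be pulled from a WooCommerce site because WooCommerce stores each product as a row in the WordPress posts table with post_type set to product. The sketch below assumes that layout. The function name `product_titles`, the shop_ prefix, and the SQLite stand-in data are hypothetical.

```python
import re
import sqlite3


def product_titles(conn, prefix="wp_"):
    """Return the titles of all WooCommerce products for one site."""
    # The prefix is spliced into SQL, so only allow identifier characters.
    if not re.fullmatch(r"[A-Za-z0-9_]*", prefix):
        raise ValueError("unexpected table prefix")
    rows = conn.execute(
        f"SELECT post_title FROM {prefix}posts "
        "WHERE post_type = 'product' ORDER BY post_title"
    )
    return [title for (title,) in rows]


# Hypothetical stand-in data using a non-default table prefix.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE shop_posts (ID INTEGER, post_type TEXT, post_title TEXT);
INSERT INTO shop_posts VALUES
    (1, 'product', 'Example item B'),
    (2, 'post', 'Hello world'),
    (3, 'product', 'Example item A');
""")
titles = product_titles(conn, prefix="shop_")
print(titles)
```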